
Calculating the Lighting

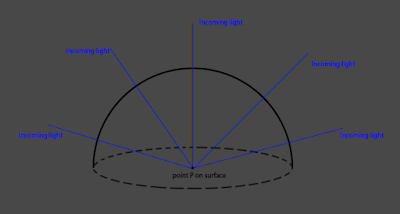

To calculate the irradiance at a point, we need to sum all of the light arriving at that point from every direction.

To do this, we integrate the incoming light over the hemisphere (the "dome") above the point.

Given the incoming light strength (radiance) at point p from direction ωi, written L(p, ωi):

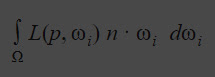
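Written out, the irradiance is E(p) = ∫Ω L(p, ωi) (n·ωi) dωi. A minimal Python sketch of that integral, assuming the hemisphere is centered on the normal n = +Z and that `radiance(theta, phi)` is a hypothetical stand-in for looking up L(p, ωi): it uses a midpoint Riemann sum over spherical coordinates, where dωi = sin θ dθ dφ and n·ωi = cos θ.

```python
import math


def irradiance_riemann(radiance, n_theta=64, n_phi=128):
    """Approximate E = ∫ L(ω) cosθ dω over the hemisphere around +Z.

    `radiance(theta, phi)` is a hypothetical callable returning the incoming
    radiance from polar angle theta and azimuth phi.
    """
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    total = 0.0
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta          # midpoint of the i-th theta cell
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi          # midpoint of the j-th phi cell
            # cosθ weights light by its angle of incidence; sinθ comes from dω
            total += radiance(theta, phi) * math.cos(theta) * math.sin(theta)
    return total * d_theta * d_phi
```

For a constant radiance of 1 the sum approaches π, the familiar E = πL result for a uniformly lit hemisphere.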

Putting the BRDF and the irradiance integral together, the reflectance equation with the Cook-Torrance BRDF looks like this:
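A common way to write this equation, assuming a Lambertian diffuse term with surface color c, weights k_d and k_s for the diffuse and specular parts, and a specular lobe built from a normal distribution function D, a Fresnel term F and a geometry term G, is:

```latex
L_o(p,\omega_o) = \int_{\Omega} \left( k_d \frac{c}{\pi}
  + k_s \frac{D\,F\,G}{4\,(\omega_o \cdot n)(\omega_i \cdot n)} \right)
  L(p,\omega_i)\,(n \cdot \omega_i)\,d\omega_i
```

Here ωo is the view direction and n the surface normal; k_d + k_s = 1 is the usual choice to keep the two parts energy-balanced.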

Direct Light

Direct light is simple: we don't need to integrate over the whole dome. Each light arrives from a single direction, so we just scale its contribution by cos θ, where θ is the angle between the light vector and the normal vector.
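A minimal single-channel Python sketch of direct lighting, assuming the GGX normal distribution, a Smith/Schlick-GGX geometry term with k = (roughness + 1)²/8 and Schlick's Fresnel approximation (similar to the choices in Real Shading in Unreal Engine 4; other BRDF terms plug in the same way). Lights are hypothetical `(direction_to_light, radiance)` pairs and the 4% dielectric F0 is an assumed default. Each light is evaluated once and scaled by n·l instead of being integrated.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def distribution_ggx(n_dot_h, roughness):
    """GGX / Trowbridge-Reitz normal distribution with alpha = roughness^2."""
    a2 = (roughness * roughness) ** 2
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def geometry_smith(n_dot_v, n_dot_l, roughness):
    """Smith shadowing-masking with Schlick-GGX, k = (roughness + 1)^2 / 8."""
    k = (roughness + 1.0) ** 2 / 8.0
    g1 = lambda x: x / (x * (1.0 - k) + k)
    return g1(n_dot_v) * g1(n_dot_l)


def fresnel_schlick(cos_theta, f0):
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def shade_direct(n, v, lights, albedo, roughness, metallic):
    """Sum the Cook-Torrance response to each light (one color channel).

    n and v are unit vectors (normal, direction to the eye); lights is a list of
    hypothetical (direction_to_light, radiance) pairs; roughness must be > 0.
    """
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic   # assumed 4% F0 for dielectrics
    n_dot_v = max(dot(n, v), 1e-4)
    color = 0.0
    for light_dir, radiance in lights:
        l = normalize(light_dir)
        n_dot_l = dot(n, l)
        if n_dot_l <= 0.0:
            continue                                     # light is behind the surface
        h = normalize((v[0] + l[0], v[1] + l[1], v[2] + l[2]))
        d = distribution_ggx(max(dot(n, h), 0.0), roughness)
        g = geometry_smith(n_dot_v, n_dot_l, roughness)
        f = fresnel_schlick(max(dot(h, v), 0.0), f0)
        specular = d * f * g / (4.0 * n_dot_v * n_dot_l)
        kd = (1.0 - f) * (1.0 - metallic)                # metals have no diffuse part
        diffuse = kd * albedo / math.pi
        # no integral: each light is one direction, scaled by cos(theta) = n·l
        color += (diffuse + specular) * radiance * n_dot_l
    return color
```

Because every light is a single direction, the cost grows linearly with the number of lights rather than with the number of integration samples.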

IBL

Environmental light is trickier. With environmental lighting, the whole surrounding environment acts as a light source that lights up the target surface, which is crucial for realistic rendering. But calculating the light from every direction is nearly impossible in real-time rendering (I say "nearly" because the latest hardware and frameworks, such as RTX, have started to support real-time ray tracing), so we use an environment texture as the light source to mimic the environmental light. This technique is called image-based lighting, or IBL. Evaluating the integral is still very expensive in real time, so to solve this problem, we divide the rendering equation into two parts:

The left side is the diffuse part, and the right side is the specular part.
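Written out in the common Cook-Torrance form (Lambertian diffuse with surface color c; D, F and G for the specular distribution, Fresnel and geometry terms; k_d and k_s weighting the two parts), the split looks like this. Because k_d c/π does not depend on ωi, it moves outside the diffuse integral:

```latex
L_o(p,\omega_o) =
  \underbrace{k_d \frac{c}{\pi} \int_{\Omega} L(p,\omega_i)\,(n \cdot \omega_i)\,d\omega_i}_{\text{diffuse}}
  + \underbrace{\int_{\Omega} k_s \frac{D\,F\,G}{4\,(\omega_o \cdot n)(\omega_i \cdot n)}\,
      L(p,\omega_i)\,(n \cdot \omega_i)\,d\omega_i}_{\text{specular}}
```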

Diffuse IBL

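In the diffuse part the BRDF is a constant, so the integral only depends on the environment map sampled along each direction ωi and, once integrated, on the surface normal alone. This lets us precalculate the integral and save it to a new texture, called the irradiance map. At run time, we look up the irradiance map with the surface normal and multiply the result by the diffuse BRDF.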
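A minimal Python sketch of both steps, assuming `env(direction)` is a hypothetical stand-in for an environment-map lookup and working in a single color channel. The precompute step estimates E(n) = ∫ L(ωi)(n·ωi) dωi with cosine-weighted Monte Carlo sampling (a swapped-in choice; a grid convolution works too): because that pdf is cos θ/π, the cosine cancels and the estimator is π times the average sampled radiance. The run-time step is a single lookup times the Lambertian term.

```python
import math
import random


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def tangent_frame(n):
    """Build two unit vectors t, b that form an orthonormal basis with normal n."""
    up = (1.0, 0.0, 0.0) if abs(n[2]) > 0.999 else (0.0, 0.0, 1.0)
    t = normalize(cross(up, n))
    b = cross(n, t)
    return t, b


def irradiance(env, n, samples=4096, seed=0):
    """Precompute step: estimate E(n) = ∫ L(ωi) (n·ωi) dωi around the unit normal n.

    `env(direction)` is a hypothetical environment lookup. Cosine-weighted
    sampling has pdf cosθ/π, so the estimate is π times the mean sample.
    """
    rng = random.Random(seed)
    t, b = tangent_frame(n)
    total = 0.0
    for _ in range(samples):
        u1, u2 = rng.random(), rng.random()
        r, phi = math.sqrt(u1), 2.0 * math.pi * u2    # uniform point on the unit disk
        x, y, z = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)
        # rotate the local sample (x, y, z) into the frame around n
        w = tuple(x * t[k] + y * b[k] + z * n[k] for k in range(3))
        total += env(w)
    return math.pi * total / samples


def shade_diffuse_ibl(irradiance_value, albedo, kd=1.0):
    """Run-time step: one irradiance lookup times the Lambertian term kd * albedo / π."""
    return kd * albedo / math.pi * irradiance_value
```

A real irradiance map would store `irradiance(env, n)` for every texel direction n of a small cube map; some implementations fold the 1/π into the stored value instead.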

An image from Wave Engine showing the difference between an environment map and an irradiance map:

My implementation details:

I don't have a precalculated irradiance map. I could have written a C++ program to calculate it on the CPU...

Instead, I simply sample a low-resolution mip level of the environment map to get the blur effect.
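A mip chain is built by repeatedly halving the texture. A minimal Python sketch of a 2×2 box-filter mip chain on one square, power-of-two image (a stand-in for a single cube-map face; graphics APIs can usually generate mip chains automatically). This is a plain box blur, not the cosine-weighted hemisphere integral, so the top mip levels only approximate an irradiance map.

```python
def downsample(img):
    """Halve a square image (list of rows) by averaging each 2x2 block of texels."""
    half = len(img) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(half)]
            for y in range(half)]


def build_mip_chain(img):
    """Return [level 0, level 1, ..., 1x1 level] for a square power-of-two image."""
    chain = [img]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain
```

Sampling one of the last levels gives the heavily blurred look that stands in for the irradiance map here.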

Specular IBL

We divide the right (specular) part into two parts:
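Following the split-sum approximation in Real Shading in Unreal Engine 4 (cited at the end of this section), the specular integral is first estimated with N importance samples ωk drawn from a pdf p(ωk, ωo), and that sum is then approximated by a product of two sums (f_s denotes the specular BRDF):

```latex
\int_{\Omega} L(p,\omega_i)\, f_s(\omega_i,\omega_o)\,(n\cdot\omega_i)\,d\omega_i
\approx \frac{1}{N}\sum_{k=1}^{N}
  \frac{L(p,\omega_k)\, f_s(\omega_k,\omega_o)\,(n\cdot\omega_k)}{p(\omega_k,\omega_o)}
\approx \left(\frac{1}{N}\sum_{k=1}^{N} L(p,\omega_k)\right)
        \left(\frac{1}{N}\sum_{k=1}^{N}
  \frac{f_s(\omega_k,\omega_o)\,(n\cdot\omega_k)}{p(\omega_k,\omega_o)}\right)
```

The first factor is what the pre-filtered environment map stores and the second is what the BRDF integration map stores. The course notes also report better results when the first sum is weighted by n·ωk.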

- Pre-filtered environment map

The left part is called the pre-filtered environment map; it computes the sum of the light that contributes to the final reflection. For diffuse light, light from all directions is included. For specular, only a small part of the incoming light affects the result: a perfect mirror has a perfect reflection, so light is only seen along the reflection vector, while rough metal has a scattered reflection because light from a wider range of directions around the reflection vector can be observed.

The result is stored in the mip levels of the pre-filtered map: the higher the mip level, the wider the range of directions integrated into each texel, which corresponds to a rougher surface.
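At run time the surface roughness picks which mip level to read. A minimal Python sketch, assuming a linear mapping from roughness to mip level (engines use different curves) and a hypothetical list `levels` holding the pre-filtered radiance for one direction at each mip level; a fractional level is blended between its two neighboring levels, the way trilinear filtering would.

```python
import math


def roughness_to_lod(roughness, mip_count):
    """Linearly map roughness in [0, 1] to a mip level in [0, mip_count - 1] (assumed mapping)."""
    r = min(max(roughness, 0.0), 1.0)
    return r * (mip_count - 1)


def sample_prefiltered(levels, roughness):
    """Blend the two mip levels around the fractional LOD, like trilinear filtering.

    levels[i] is a hypothetical pre-filtered radiance value for one direction at
    mip i (level 0 = sharp mirror reflection, last level = roughest).
    """
    lod = roughness_to_lod(roughness, len(levels))
    lo = int(math.floor(lod))
    hi = min(lo + 1, len(levels) - 1)
    t = lod - lo
    return levels[lo] * (1.0 - t) + levels[hi] * t
```

On the GPU the same blend comes from sampling the cube map with an explicit LOD, so only the roughness-to-LOD mapping has to be written by hand.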

- BRDF integration map
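The BRDF integration map stores the second of the two parts: for each (n·v, roughness) pair it holds a scale and a bias applied to F0, so the specular term becomes prefiltered × (F0 × scale + bias). A minimal Python sketch modeled on the IntegrateBRDF shader in Real Shading in Unreal Engine 4, assuming the GGX distribution, Smith/Schlick-GGX geometry with k = roughness²/2 for IBL, Schlick's Fresnel and a Hammersley point set; n is fixed to +Z and n·v must be greater than 0.

```python
import math


def radical_inverse_vdc(i):
    """Van der Corput radical inverse in base 2: mirror the bits of i about the binary point."""
    result, f = 0.0, 0.5
    while i:
        if i & 1:
            result += f
        i >>= 1
        f *= 0.5
    return result


def hammersley(i, n):
    return i / n, radical_inverse_vdc(i)


def importance_sample_ggx(xi, roughness):
    """Sample a half vector around n = +Z with pdf proportional to D(h) (n·h) for GGX."""
    a = roughness * roughness
    phi = 2.0 * math.pi * xi[0]
    cos_theta = math.sqrt((1.0 - xi[1]) / (1.0 + (a * a - 1.0) * xi[1]))
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    return (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)


def g_smith_ibl(n_dot_v, n_dot_l, roughness):
    """Smith/Schlick-GGX geometry term with k = alpha / 2 = roughness^2 / 2 for IBL."""
    k = roughness * roughness / 2.0
    g1 = lambda x: x / (x * (1.0 - k) + k)
    return g1(n_dot_v) * g1(n_dot_l)


def integrate_brdf(n_dot_v, roughness, samples=1024):
    """Return (scale, bias) such that the specular factor is F0 * scale + bias."""
    v = (math.sqrt(1.0 - n_dot_v * n_dot_v), 0.0, n_dot_v)   # view vector, with n = +Z
    scale = bias = 0.0
    for i in range(samples):
        h = importance_sample_ggx(hammersley(i, samples), roughness)
        v_dot_h = v[0] * h[0] + v[1] * h[1] + v[2] * h[2]
        n_dot_l = 2.0 * v_dot_h * h[2] - v[2]          # z of l = reflect(-v, h)
        if n_dot_l > 0.0:
            v_dot_h = max(v_dot_h, 0.0)
            # G * (v·h) / ((n·h)(n·v)) is BRDF * (n·l) / pdf with F factored out
            g_vis = g_smith_ibl(n_dot_v, n_dot_l, roughness) * v_dot_h / (h[2] * n_dot_v)
            fc = (1.0 - v_dot_h) ** 5                   # Schlick Fresnel weight
            scale += (1.0 - fc) * g_vis
            bias += fc * g_vis
    return scale / samples, bias / samples
```

Baking the map means calling `integrate_brdf` on a grid of (n·v, roughness) values and storing the two results in a two-channel texture; it depends on neither the environment nor F0, so it can be computed once.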

My implementation details:

I use importance sampling to approximate the radiance integral.

I referred to "Real Shading in Unreal Engine 4" (Brian Karis, SIGGRAPH 2013) to implement the specular IBL.
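For the pre-filtered environment map, importance sampling concentrates the samples where the GGX lobe is large. A minimal Python sketch modeled on the PrefilterEnvMap shader in Real Shading in Unreal Engine 4, assuming `env(direction)` is a hypothetical single-channel environment lookup. Like the course notes, it assumes n = v = r (the reflection direction), which is what lets the result depend only on r and roughness, and it weights each sample by n·l.

```python
import math


def radical_inverse_vdc(i):
    """Van der Corput radical inverse in base 2."""
    result, f = 0.0, 0.5
    while i:
        if i & 1:
            result += f
        i >>= 1
        f *= 0.5
    return result


def hammersley(i, n):
    return i / n, radical_inverse_vdc(i)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def importance_sample_ggx(xi, roughness, n):
    """GGX half-vector sample around the unit vector n (pdf proportional to D(h) (n·h))."""
    a = roughness * roughness
    phi = 2.0 * math.pi * xi[0]
    cos_theta = math.sqrt((1.0 - xi[1]) / (1.0 + (a * a - 1.0) * xi[1]))
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    local = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
    # tangent frame around n, then rotate the local sample into world space
    up = (1.0, 0.0, 0.0) if abs(n[2]) > 0.999 else (0.0, 0.0, 1.0)
    t = normalize(cross(up, n))
    b = cross(n, t)
    return tuple(local[0] * t[k] + local[1] * b[k] + local[2] * n[k] for k in range(3))


def prefilter_env(env, r, roughness, samples=1024):
    """Pre-filtered radiance along the unit reflection direction r, assuming n = v = r."""
    n = v = r
    color = weight = 0.0
    for i in range(samples):
        h = importance_sample_ggx(hammersley(i, samples), roughness, n)
        v_dot_h = dot(v, h)
        l = tuple(2.0 * v_dot_h * h[k] - v[k] for k in range(3))   # reflect -v about h
        n_dot_l = dot(n, l)
        if n_dot_l > 0.0:
            color += env(l) * n_dot_l          # weight each sample by n·l
            weight += n_dot_l
    return color / weight
```

Baking one mip level means calling `prefilter_env` for every texel direction r with the roughness assigned to that level; roughness 0 reduces to a plain lookup along r.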